Mindbreeze, a leading provider of Knowledge Management and Information Insight, enables customers to securely apply innovations in Generative AI to sensitive enterprise data. Advanced Large Language Models (LLMs) combined with Mindbreeze InSpire provide exceptional customer experiences in natural language processing, text generation, and data security.

“Generative AI (GenAI) and tools like ChatGPT have taken the world by storm,” explains Daniel Fallmann, founder and CEO of Mindbreeze. “However, for these technologies to be used professionally in companies, numerous challenges must be addressed – for example, data hallucination, lack of data security, permissions, critical intellectual property issues, expensive training costs, and the technical implementation with confidential company data. Mindbreeze InSpire solves these challenges to form the ideal basis for making Generative AI the best fit for enterprise use.”

Integration and the many advantages for users

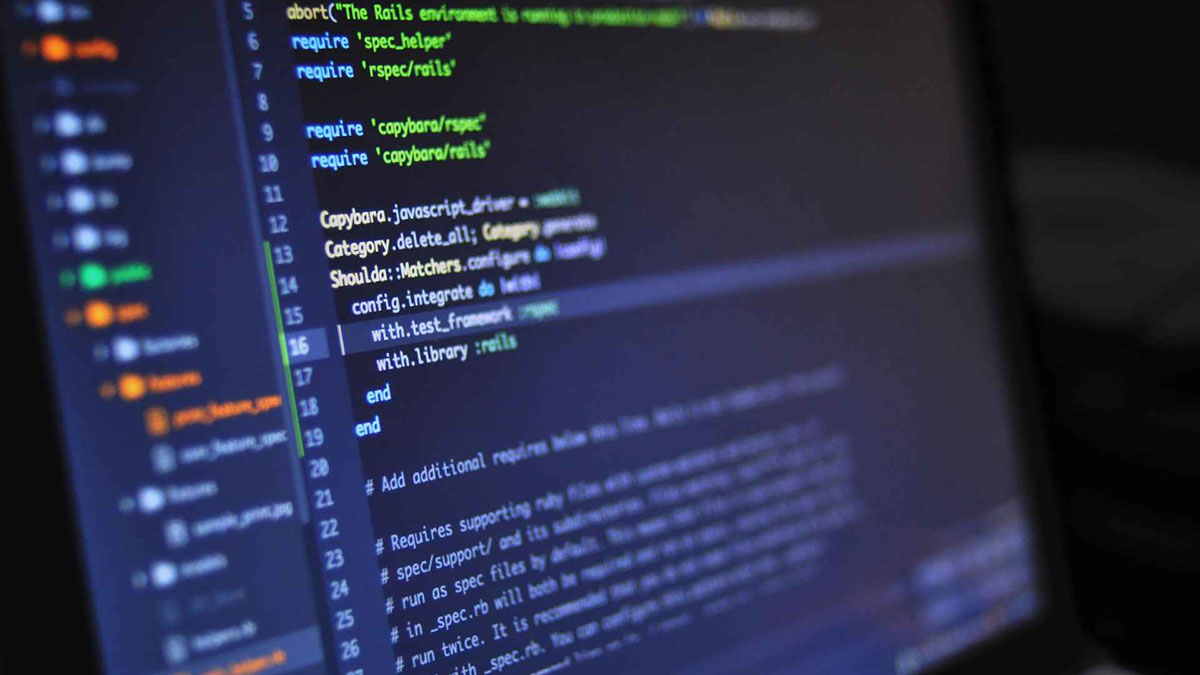

In contrast to applications such as ChatGPT, whose data origin is usually not traceable, Mindbreeze InSpire uses existing company data as the basis for machine learning. This ensures that the data used to train the models always belongs to the respective company and does not flow into a public model. Existing and generated content is always secure, correct, trustworthy, and, above all, traceable. Because the solution cites the source alongside each answer, users can validate the answers at any time.
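The idea of returning the source together with the answer, restricted to what a user is permitted to see, can be illustrated with a minimal sketch. This is not the Mindbreeze InSpire API: the `Passage` type, the `answer_with_sources` function, the group names, and the simple word-overlap scoring are all hypothetical, chosen only to show the principle of permission-aware retrieval with source references.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    """Hypothetical indexed passage with its origin and access groups."""
    source: str
    text: str
    allowed_groups: frozenset


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return {w.strip(".,;:!?").lower() for w in text.split()} - {""}


def answer_with_sources(question, passages, user_groups, top_k=2):
    """Return the best-matching passages the user may see, plus their sources."""
    q = tokenize(question)
    # Permission filter: keep only passages shared with one of the user's groups.
    visible = [p for p in passages if p.allowed_groups & user_groups]
    # Assumption: plain word overlap stands in for a real relevance model.
    scored = [(len(q & tokenize(p.text)), p) for p in visible]
    scored = [(s, p) for s, p in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    hits = [p for _, p in scored[:top_k]]
    return {
        "context": [p.text for p in hits],    # text that would be passed to an LLM
        "sources": [p.source for p in hits],  # references shown next to the answer
    }


corpus = [
    Passage("hr/vacation-policy.pdf",
            "Employees receive 25 vacation days per year.",
            frozenset({"hr", "staff"})),
    Passage("finance/q3-forecast.xlsx",
            "Vacation accrual liability forecast for Q3.",
            frozenset({"finance"})),
]

result = answer_with_sources(
    "How many vacation days do employees receive?", corpus, frozenset({"staff"})
)
print(result["sources"])
```

In this sketch a user in the `staff` group only ever receives context and references from documents shared with that group, so the finance document is neither used for the answer nor exposed as a source.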

Mindbreeze InSpire gives users intelligent, informed, and personalized answers, allowing companies to use AI safely, responsibly, and sustainably.

Mindbreeze offers maximum flexibility through open standards

Mindbreeze leaves the selection of the preferred LLM to its customers. The Mindbreeze InSpire insight engine is delivered with pre-trained models. Because it builds on transformer models and open standards, models from communities such as Hugging Face can be used easily. In addition, Mindbreeze offers customers qualified support in selecting a suitable LLM and the associated use cases.

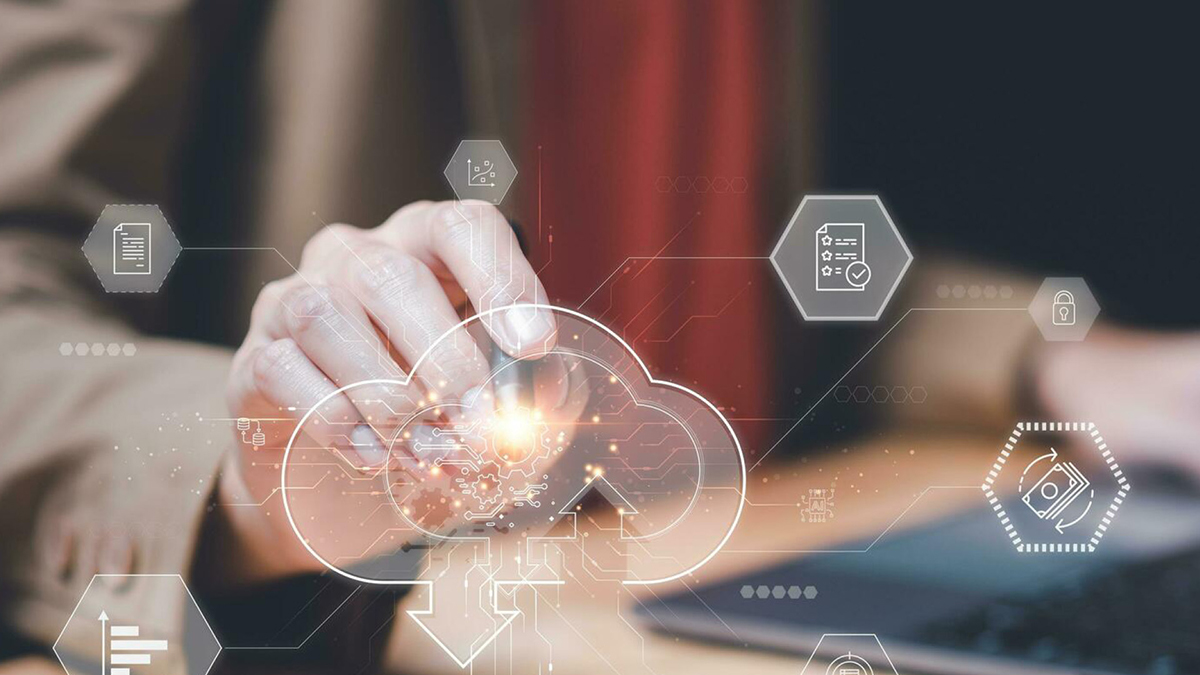

“What makes Mindbreeze unique in this area is that the models run both in the cloud and on our on-premises appliances. Customers have complete freedom of choice in selecting their LLM and where they want to process their data,” explains Jakob Praher, CTO of Mindbreeze. “Our many years of experience with highly scalable processing of huge data sets enables us to deliver customized hardware and software solutions.”

Mindbreeze has been offering a complete hybrid approach for years, resolving critical dependencies by making all of Mindbreeze InSpire’s capabilities available on-premises and in the cloud.